The Divide

The AI revolution in business is stuck in a paradox. Getting organizations excited about artificial intelligence is easy. Making it work in practice? That’s where things fall apart.

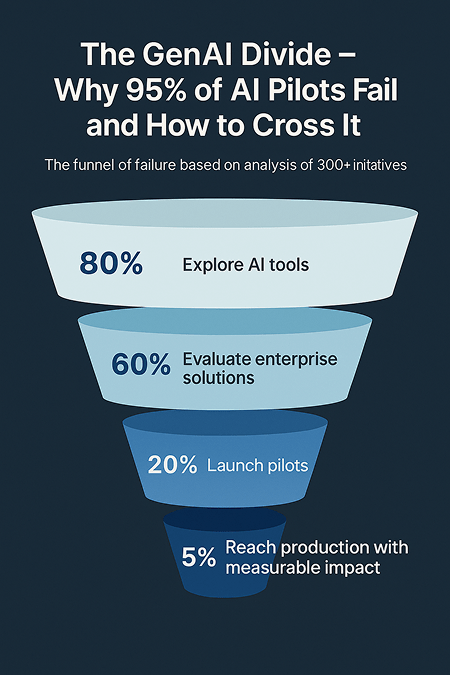

Companies are pouring $30–40 billion into generative AI, yet an MIT NANDA study – The GenAI Divide: State of AI in Business 2025 – finds that 95% of enterprise pilots deliver zero measurable return.

The study analyzed 300+ initiatives, 52 organizational interviews, and 153 senior leader surveys. The findings reveal a funnel of failure:

- 80% of organizations explore AI tools

- 60% evaluate enterprise solutions

- 20% launch pilots

- Only 5% reach production with measurable impact
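To see where the funnel narrows most, the stage-to-stage conversion can be worked out from the four percentages above. This is a minimal sketch that assumes all four figures are shares of the same population of organizations; the names `FUNNEL` and `stage_conversions` are illustrative and not from the study.

```python
# Funnel shares as quoted in the article (percent of organizations).
# Assumption: all four figures refer to the same base population.
FUNNEL = [
    ("explore AI tools", 80),
    ("evaluate enterprise solutions", 60),
    ("launch pilots", 20),
    ("reach production with measurable impact", 5),
]


def stage_conversions(funnel):
    """Return (from_stage, to_stage, conversion_percent) for each adjacent pair."""
    steps = []
    for (prev_name, prev_pct), (name, pct) in zip(funnel, funnel[1:]):
        # Share of organizations at the previous stage that reach this one.
        steps.append((prev_name, name, round(100 * pct / prev_pct, 1)))
    return steps


if __name__ == "__main__":
    for prev_name, name, rate in stage_conversions(FUNNEL):
        print(f"{prev_name} -> {name}: {rate}%")
```

Under that assumption, 75% of explorers go on to evaluate, about 33.3% of evaluators launch a pilot, and 25% of pilots reach production, so the sharpest drop-offs sit at the pilot and production stages rather than at initial interest.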

Large enterprises run the most pilots but take nine months on average to scale, compared to just 90 days for mid-market firms. The result: billions spent, billions wasted.

This isn’t a technology failure. It’s an execution failure.

Note: MIT’s findings come from 52 organizational interviews and 300+ initiatives – a significant but not exhaustive sample. Success definitions vary, and some projects may need longer observation periods to show full impact. Nonetheless, this trend represents billions of dollars of investment that fail to achieve the desired result. Whether the true failure rate is 95% or slightly lower, it’s essential to unpack why.

Why AI Keeps Falling Short

The Learning Gap

Unlike people, who change and adapt through feedback, today’s AI systems don’t improve with use. They don’t retain feedback, adapt to edge cases, or learn context over time unless directly prompted. Users report:

- “It doesn’t learn from our feedback” (60%)

- “Too much manual context required” (55%)

- “Can’t customize to workflows” (45%)

- “Breaks in edge cases” (40%)

The problem isn’t raw model capability; GPT-5 and Claude can generate dazzling outputs. The problem is that enterprise rollouts strip away context, feedback, and adaptability, leaving static tools where dynamic systems are needed.

The Employee-Enterprise Disconnect

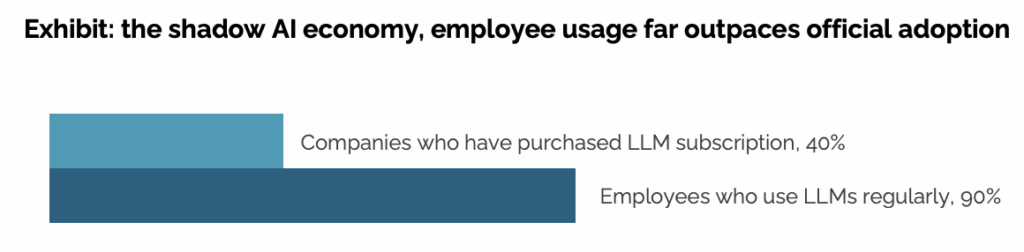

This gap fuels a shadow AI economy, where over 90% of employees secretly use personal tools like ChatGPT at work – often with higher ROI than official enterprise deployments. That individuals can bridge this divide while organizations can’t suggests that employees may be better at aiming AI tools at a specific process or step, while enterprises try to replace large, complex, established workflows in one fell swoop.

But individual learning is also shaping how employees perceive enterprise AI, making their expectations even higher. The study explains, “This shadow usage creates a feedback loop: employees know what good AI feels like, making them less tolerant of static enterprise tools.”

Rather than treating shadow usage as a barrier to adoption, the most successful AI initiatives leveraged the expertise of these early adopters:

Many of the strongest enterprise deployments began with power users, employees who had already experimented with tools like ChatGPT or Claude for personal productivity. These “prosumers” intuitively understood GenAI’s capabilities and limits, and became early champions of internally sanctioned solutions. Rather than relying on a centralized AI function to identify use cases, successful organizations allowed budget holders and domain managers to surface problems, vet tools, and lead rollouts. This bottom-up sourcing, paired with executive accountability, accelerated adoption while preserving operational fit.

The Rollout Trap

We know that success is possible, so why do so many companies stumble? Research points to three reinforcing causes:

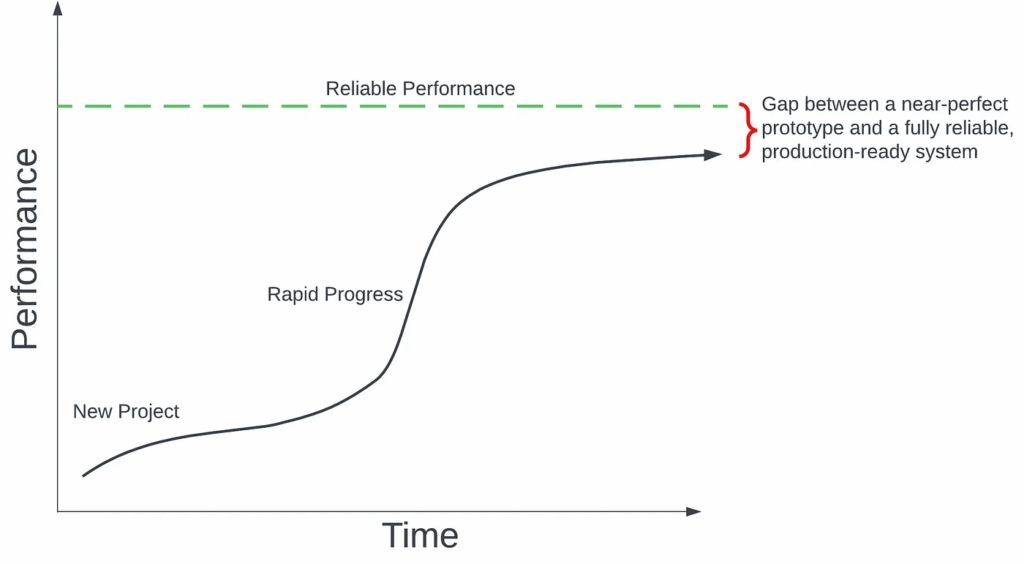

- Vendor Hype → Inflated Expectations: Flashy demos convince executives that a pilot equals production. With AI-assisted coding and mockup tools like Cursor and Vercel v0, it’s easier than ever to spin up a polished prototype that looks great and may even be somewhat usable, but still falls far short of what’s needed in a secure, scalable production system.

- ROI Fixation on Quick Wins: Leaders chase headcount savings instead of building durable capability.

- Ignoring Context Complexity: GenAI shines in creative marketing but fails in compliance, customer service, or regulated workflows.

One CIO explains, “We’ve seen dozens of demos this year. Maybe one or two are genuinely useful. The rest are wrappers or science projects.”

Within the study, a VP of Procurement at a Fortune 1000 pharmaceutical company expressed this challenge clearly: “If I buy a tool to help my team work faster, how do I quantify that impact? How do I justify it to my CEO when it won’t directly move revenue or decrease measurable costs? I could argue it helps our scientists get their tools faster, but that’s several degrees removed from bottom-line impact.”

This also points to how success was measured in the study: purely through the lens of direct revenue creation. That lens led organizations to focus on more visible sales and marketing initiatives while ignoring back-office use cases that may have yielded better ROI with lower risk of impacting customers.

How to Cross the Divide

The best companies don’t treat AI as a switch to flip. They treat it as a muscle to build. Here’s how:

- Start small → One clear use case with realistic outcomes and a realistic time period.

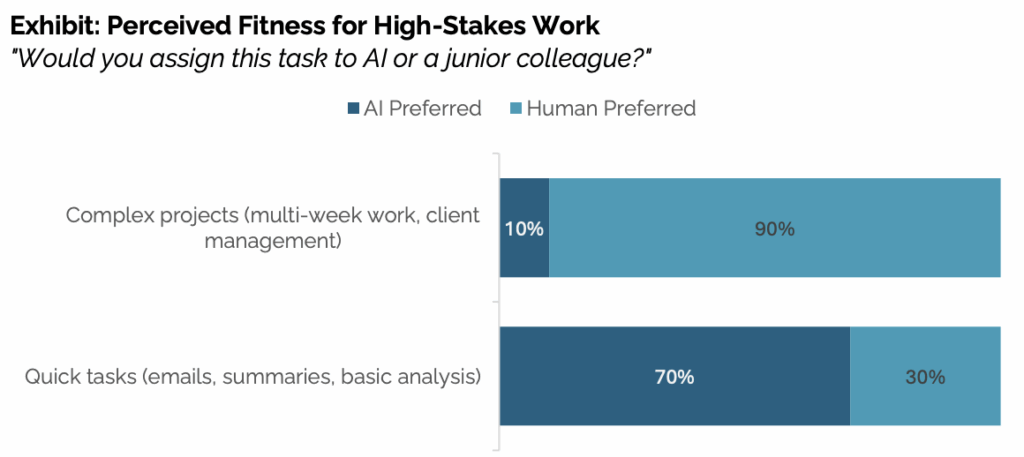

- Focus on scale-friendly tasks → High-volume, low-risk work (summarization, pattern detection, workflow assistance) instead of mission-critical, complex decisions

- Blend human + AI → Pairing boosts productivity by 30–45% (McKinsey)

- Measure meaningful impact → CSAT, retention, resolution quality — not just deflection rates.

- Leverage proven, external solutions → Partnerships succeed 67% of the time vs. 33% for in-house development
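One way to make the “measure meaningful impact” step concrete is to judge a pilot on several metrics at once rather than on deflection alone. The sketch below is illustrative only: the `PilotSnapshot` fields, the `assess` function, and the rule that a pilot counts as healthy only when CSAT and retention hold steady are all assumptions, not something the study prescribes.

```python
from dataclasses import dataclass


@dataclass
class PilotSnapshot:
    """Hypothetical metrics for one measurement period."""
    deflection_rate: float  # share of contacts resolved without a human (0-1)
    csat: float             # customer satisfaction score (0-100)
    retention: float        # share of customers retained (0-1)


def assess(before: PilotSnapshot, after: PilotSnapshot) -> str:
    """Classify a pilot by comparing a baseline period with a pilot period.

    Assumed rule: higher deflection only counts as a win when CSAT and
    retention have not dropped below the baseline.
    """
    deflection_up = after.deflection_rate > before.deflection_rate
    quality_held = (
        after.csat >= before.csat and after.retention >= before.retention
    )
    if deflection_up and quality_held:
        return "healthy"
    if deflection_up:
        return "deflection-only"
    return "no-gain"
```

With made-up numbers, a pilot that lifts deflection from 0.20 to 0.35 while CSAT slips from 82 to 74 would be labeled `deflection-only`, which is the pattern the deflection-rate metric hides when it is used on its own.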

Mistakes vs. What Leaders Do Instead

| Common Mistake | What Successful Leaders Do |
| --- | --- |
| Vague goals like “cut costs” | Define one clear use case with attainable outcomes |
| “AI will replace all agents” | AI handles low-complexity tasks, humans the complex ones; shift over time |
| ROI = Deflection rate | ROI = CSAT, AHT, retention |
| Deploy fast and wide | Pilot narrow use cases to learn safely |
| Expect full transformation in weeks | Build stepwise learnings into long-term success |

🔥 Hot tips to avoid 95% AI project failure 🔥

Already deployed AI and want to get your project back on track? Here are three steps you can take this week:

- Audit your current AI pilots: Are they solving real problems or chasing buzzwords? Could you break larger initiatives into smaller milestones?

- Survey your team about their “shadow AI” usage: What are they accomplishing with personal tools? What do they need from an enterprise AI tool in order to adopt and trust it?

- Focus on a realistic, unsexy quick win: Pick one high-volume, low-risk process for a focused pilot with clear success metrics. Look for back-office use cases that impact the bottom line more than your headlines.

Building for the Future

The future isn’t machines replacing us. It’s machines amplifying human capabilities. As Google CEO Sundar Pichai puts it:

“The future of AI is not about replacing humans, it’s about augmenting human capabilities.”

Forward-thinking companies are preparing for an agentic future — AI systems that retain context, adapt, and collaborate across workflows. That means investing in:

- Context retention layers that carry organizational knowledge

- Feedback loops that apply corrections systematically

- Workflow integration to embed AI into existing processes

- Adaptive architectures that evolve based on use

Researchers estimate an 18-month horizon before vendor lock-in makes strategic positioning harder. Early adopters who close the learning gap will capture lasting advantage.

Bottom Line

AI isn’t failing us. We’re failing to use it right.

The real questions for leaders:

- What are the biggest problems we need to solve?

- Where can AI realistically help within existing workflows today?

The GenAI Divide isn’t crossed in one leap – it’s bridged one successful implementation at a time. Shift from silver-bullet moonshots to focused, capability-building initiatives, and you won’t just run impressive demos; you’ll finally cross the Divide.