Look, AI is finally doing real work in customer support! The future is here! But if you’re expecting some kind of plug-and-play miracle, I have bad news: this is less “push button, receive bacon” and more “train new employee (with zero personality) who happens to be made of math.”

Your LLM needs an adult, not just an audit

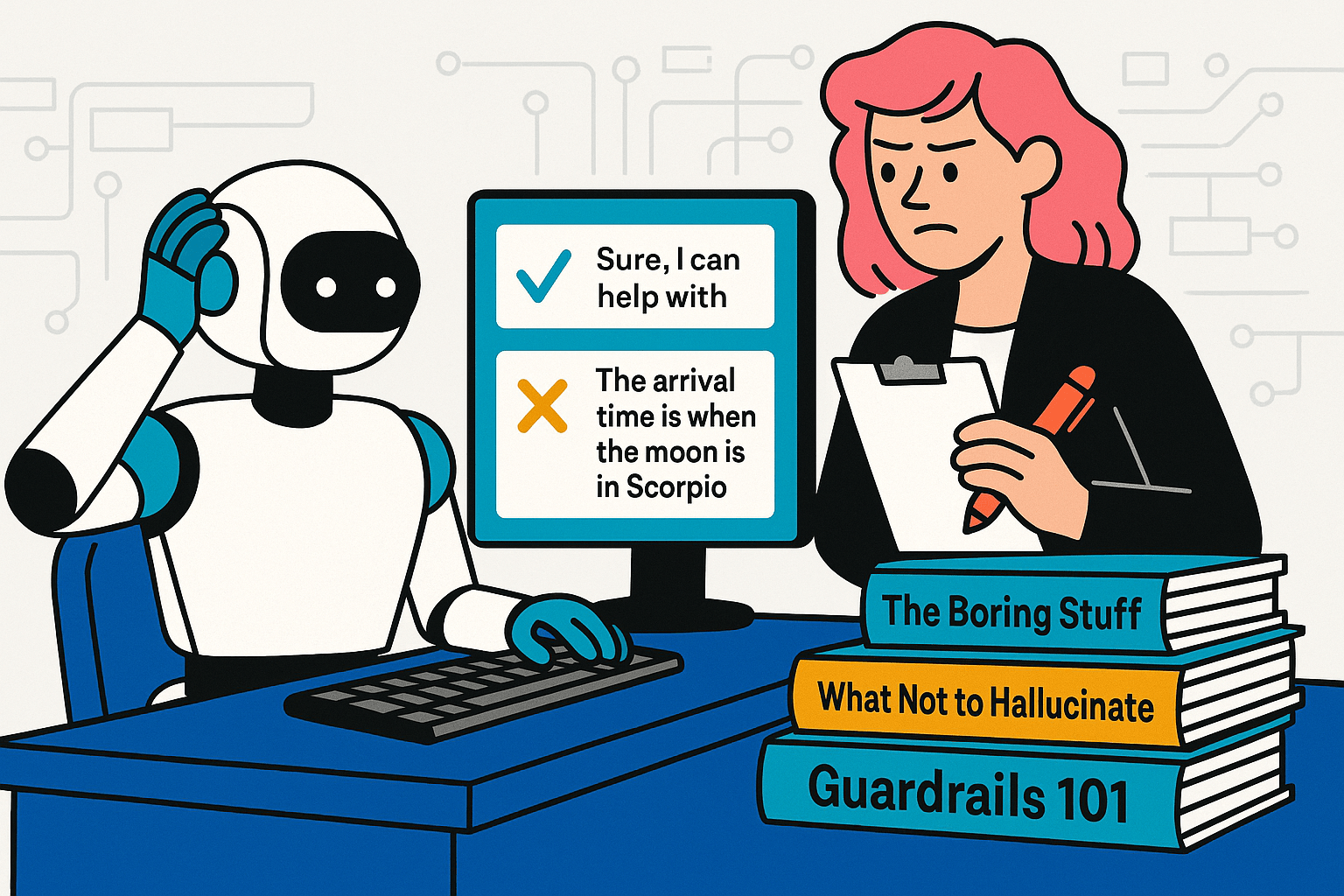

Yes, GPT-5 can write you a sonnet about return policies that can bring you to tears. But it can also confidently hallucinate a refund process you absolutely don’t offer and tell a customer their order will arrive “whenever the moon is in Scorpio.”

You need guardrails. Hard rules for the non-negotiables (no, we will not ship to North Korea, I don’t care how politely the customer asks). Sentiment models so you know when someone’s about to lose it. And then your LLM for everything in between. It’s a system of controls, not a magic switch.
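If it helps to see that layering in code, here is a minimal sketch. Everything in it is hypothetical: the `Ticket` fields, the blocked-country set, the sentiment scale, and the threshold are stand-ins for whatever your own policies and models produce. The only point is the order: hard rules first, sentiment check second, LLM last.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical values for illustration; real rules come from your own policies.
BLOCKED_DESTINATIONS = {"KP"}   # ISO 3166 country code for North Korea
ESCALATION_THRESHOLD = -0.5     # assumed sentiment scale: -1 (furious) to +1 (happy)


@dataclass
class Ticket:
    text: str
    ship_to_country: str
    sentiment: float  # assumed to be scored by a separate sentiment model


def route(ticket: Ticket, llm_reply: Callable[[str], str]) -> str:
    """Apply the controls in order: hard rules, then sentiment, then the LLM."""
    # 1. Non-negotiables are plain code, so the LLM never gets a vote.
    if ticket.ship_to_country in BLOCKED_DESTINATIONS:
        return "REFUSE: we do not ship to this destination."
    # 2. A customer who is about to lose it goes to a human.
    if ticket.sentiment <= ESCALATION_THRESHOLD:
        return "ESCALATE: hand off to a human agent."
    # 3. Everything in between goes to the LLM.
    return llm_reply(ticket.text)


def fake_llm(text: str) -> str:
    # Stand-in for a real model call.
    return f"LLM: happy to help with '{text}'"


print(route(Ticket("Please ship this anyway", "KP", 0.9), fake_llm))
print(route(Ticket("This is the third time I've asked!", "US", -0.8), fake_llm))
print(route(Ticket("Where is my order?", "US", 0.1), fake_llm))
```

Keeping the non-negotiables in ordinary code means no amount of polite prompting can talk the system out of them.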

If you don’t measure everything, you measure nothing

Sure, it sounds like fortune cookie logic. But here’s the thing nobody wants to hear: if you’re not scoring your AI agent conversations the same way you score your human ones, you’re just doing vibes-based management with extra steps. It’s clear now that AI agents are going to take more and more volume, so you need to build one view, not manage two sets of metrics.

Track the same things for both: what was the reason for contact, was the issue resolved, what was their sentiment coming into the conversation, and what was it when they left? When you measure humans and bots with the same ruler, it becomes painfully obvious where each one face-plants.
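One way to picture "the same ruler" is a single record type that every conversation fills in, no matter who handled it. The sketch below is an illustration, not a prescribed schema: the field names are made up, and treating the customer's starting state as a sentiment score is an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Conversation:
    handled_by: str         # "ai" or "human"
    contact_reason: str
    resolved: bool
    sentiment_start: float  # assumed scale: -1 (furious) to +1 (happy)
    sentiment_end: float


def scorecard(conversations):
    """Score AI and human conversations with identical metrics."""
    groups = defaultdict(list)
    for c in conversations:
        groups[(c.contact_reason, c.handled_by)].append(c)

    report = {}
    for key, convs in groups.items():
        n = len(convs)
        report[key] = {
            "count": n,
            "resolution_rate": sum(c.resolved for c in convs) / n,
            "avg_sentiment_change": sum(
                c.sentiment_end - c.sentiment_start for c in convs
            ) / n,
        }
    return report


# Invented sample data, purely for illustration.
sample = [
    Conversation("ai", "password_reset", True, 0.0, 0.5),
    Conversation("ai", "password_reset", False, 0.0, -0.5),
    Conversation("human", "password_reset", True, -0.5, 0.5),
]

for (reason, who), metrics in scorecard(sample).items():
    print(reason, who, metrics)
```

Because both groups come out of the same function, a gap in resolution rate or sentiment change for a given contact reason is a like-for-like comparison.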

Real numbers from people who actually did this

Calendly cut handle time by 3 minutes and dropped cost per case by 23%. They did it by starting with agent assist, adding QA, then layering in analytics.

Notice what they didn’t do: buy an AI solution, slap it on their website, and declare victory. They iterated. For months. While measuring everything. Revolutionary, I know.

They’re now taking that knowledge and making their AI agent better at deflecting straightforward issues. This strikes the right balance of experience and effectiveness without the typical AI agent trial and error.

What AI is genuinely good at

Robots are fantastic at the stuff that makes humans want to not show up for work tomorrow. Password resets? AI loves those. Insurance policy checks at 3 AM? Amazing for a bot. Anything that requires doing the exact same steps in the exact same order 900 times a day? AI will do it without slowly losing the will to live.

And unlike your team lead who “just needs a day,” AI can apply your policies consistently. Same answer at 2 PM on Monday and 2 AM on Sunday. It doesn’t get hangry. It doesn’t have opinions about how stupid your return policy is, even when your return policy is stupid. But remember, constraints need to be in place so it doesn’t have to “guess” what your policy is. Because when it guesses, people get a $70,000 SUV for $1.

What still needs an actual human

Once you automate the easy stuff, what’s left in the queue? The weird ones. The customer who has a legitimate problem that your documentation didn’t anticipate because reality is chaos. The upset person who needs someone with actual empathy, not simulated empathy. The gray-area policy call that requires judgment.

Your average handle time (AHT) may initially go up because the humans are only getting the hard ones now. But if you’re collecting intelligence on what your customer issues are and how best to handle them, you’ll be able to incorporate them into your process and expedite or even eliminate issues before they happen.

You could also make a tradeoff between AHT and volume. It’s better to spend time solving issues than have your customer drive up your costs by contacting you on every available channel. Or worse, never contact you and churn. So how do you make better use of your AI?

Start with boring, specific problems

Pick something concrete and repetitive: “Reset password after we’ve verified it’s actually them.” Or “Process a return when it’s within the window and the tags are still on.” Using metrics, not just hunches, you can uncover which issues have the “least turbulent” sentiment journey, a manageable number of exchanges, and a low incidence of escalations or other outliers.

Prioritize issues that meet these criteria, launch them, watch them go in directions you didn’t anticipate, fix those missteps, and repeat. Thrilling stuff. Also: the only way this works.
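Here is a rough sketch of what "metrics, not just hunches" could look like when shortlisting issues. The three fields mirror the criteria above (sentiment turbulence, number of exchanges, escalations), but the field names, the thresholds, and the sample numbers are all invented for illustration. Tune them against your own data.

```python
from dataclasses import dataclass


@dataclass
class IssueStats:
    name: str
    sentiment_volatility: float  # how much sentiment swings mid-conversation
    avg_exchanges: float         # average back-and-forth messages
    escalation_rate: float       # share of conversations that were escalated


def automation_candidates(
    issues,
    max_volatility=0.2,        # hypothetical threshold
    max_exchanges=6,           # hypothetical threshold
    max_escalation_rate=0.05,  # hypothetical threshold
):
    """Keep issues that pass every threshold, calmest and shortest first."""
    eligible = [
        i for i in issues
        if i.sentiment_volatility <= max_volatility
        and i.avg_exchanges <= max_exchanges
        and i.escalation_rate <= max_escalation_rate
    ]
    return sorted(eligible, key=lambda i: (i.escalation_rate, i.avg_exchanges))


# Invented numbers, not real benchmarks.
issues = [
    IssueStats("billing_dispute", 0.6, 9, 0.30),
    IssueStats("return_in_window", 0.15, 4, 0.04),
    IssueStats("password_reset", 0.1, 3, 0.02),
]

print([i.name for i in automation_candidates(issues)])
# → ['password_reset', 'return_in_window']
```

An issue has to clear every bar to make the list, which keeps one flattering metric from hiding a messy one.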

Stop doing these things (please)

Stop buying AI point solutions and launching them like the last person to have AI loses. Stop chasing “100% automation” – especially if your business has high-touch problems that need a human brain. And finally, stop treating your analytics dashboard like your 401k, checking that it’s still there once a quarter. It should be driving decisions on what to automate next, not collecting dust.

A plan with the highest likelihood of success

- Pick three boring, repetitive issues. Write them down. If you can’t explain the use case in two sentences, it’s not ready.

- Define what success looks like, what failure looks like, and when the AI should bail out. Use examples, not corporate poetry.

- Turn on analytics for everyone – AI and humans both. Day one. No excuses.

- Every week, review what broke. Fix root causes. Ship updates. Repeat until you’re bored.

- Only then – only then – add the next use case.
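The first two steps of that plan can be written down as a tiny spec, sketched below. This is one possible shape, not a standard: `UseCaseSpec`, its fields, the naive sentence counter, and the `max_turns` bail-out limit are all assumptions made for the example.

```python
import re
from dataclasses import dataclass, field


@dataclass
class UseCaseSpec:
    description: str
    success_examples: list = field(default_factory=list)
    failure_examples: list = field(default_factory=list)
    max_turns: int = 6  # hypothetical limit before the AI hands off

    def is_ready(self) -> bool:
        """Ready means: explained in two sentences or fewer, with real examples."""
        # Naive sentence split on ., ! and ?; good enough for a sanity check.
        sentences = [s for s in re.split(r"[.!?]+", self.description) if s.strip()]
        return (
            1 <= len(sentences) <= 2
            and bool(self.success_examples)
            and bool(self.failure_examples)
        )

    def should_bail_out(self, turns_so_far: int) -> bool:
        """Hand off to a human once the conversation runs too long."""
        return turns_so_far >= self.max_turns


spec = UseCaseSpec(
    description="Reset password after we've verified it's actually them.",
    success_examples=["Customer confirms they can log in again."],
    failure_examples=["Customer fails identity verification twice."],
)

print(spec.is_ready())          # True
print(spec.should_bail_out(2))  # False
print(spec.should_bail_out(6))  # True
```

A turn limit is only one possible bail-out trigger; failed verification or a sentiment drop would be equally reasonable ones to add.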

The actual point

AI agents aren’t a cheat code. They’re a team member who needs training, oversight, and honest performance reviews. Mix your tools thoughtfully, measure obsessively, and keep shipping small fixes.

Do that, and you’ll see the only two metrics that matter move in the right direction: the easy stuff gets handled faster, and your humans can focus on the complicated problems that actually require, you know, humanity. Which is still most of what builds customer trust, in case anyone forgot.